When talking about AI, it’s important to understand the concept of “tokens.” Tokens are the smallest units of data that Large Language Models (LLMs) use to process input and generate output. Tokenization is how an LLM breaks your input into these units so that it can interpret the text and generate a useful response in human language. This blog covers what tokens are in AI language models, their limits, and how the latest AI requirements management tools use them.

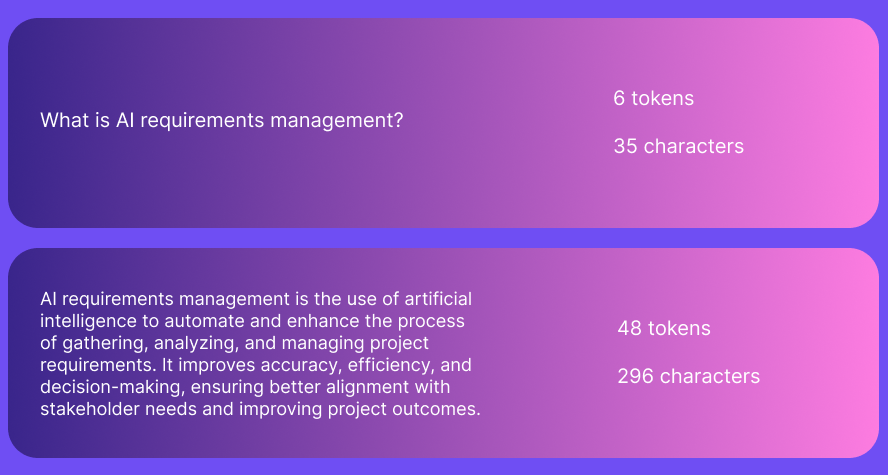

A typical ChatGPT interaction might consume tokens as follows:

The number of tokens you consume depends on the AI model you are using. For OpenAI products, GPT-4o mini is ideal for developers, BAs, QAs, project managers, and product owners. GPT-4o is the highest-performance model, but it consumes more tokens.

The above example was generated on GPT-4o.

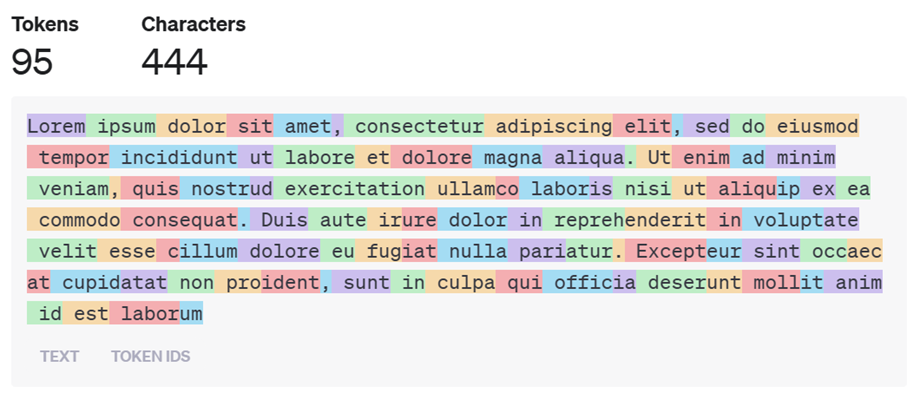

You can further explore AI token consumption on the ChatGPT Tokenizer tool.
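
If you only need a ballpark figure before opening a tokenizer, a common rule of thumb for English text is roughly four characters per token. The sketch below applies that heuristic; the `estimate_tokens` helper and the `CHARS_PER_TOKEN` constant are illustrative names, not part of any OpenAI API, and real counts depend on the model's tokenizer.

```python
import math

# Assumption: about 4 characters per token for typical English text.
# This is a rough heuristic, not an exact tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for English text."""
    if not text:
        return 0
    return math.ceil(len(text) / CHARS_PER_TOKEN)


prompt = "Write three acceptance criteria for a login page."
print(f"Estimated tokens: {estimate_tokens(prompt)}")
```

Treat the result as a planning number only. Code, non-English text, and unusual formatting can tokenize very differently.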

Even the most advanced generative AI models have token limits. The model you choose caps how many tokens a single interaction can use, counting both your input and the model’s output. That cap is called the context window. Here’s how it works for different GPTs with examples:

You will also often see token limits written as 4k, 8k, or 32k. These figures refer to the maximum number of tokens a model can handle in a single interaction or conversation.
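
To make the limit concrete, here is a minimal sketch of a pre-flight check that a request fits in a given window. It assumes the window covers both the prompt and the reply, and it uses rounded sizes for the 4k, 8k, and 32k labels; the exact limit varies by model. `fits_in_context` and `WINDOWS` are hypothetical names used only for illustration.

```python
# Assumption: rounded window sizes for the "4k", "8k", and "32k" labels.
# Exact limits differ slightly from model to model.
WINDOWS = {"4k": 4_000, "8k": 8_000, "32k": 32_000}


def fits_in_context(prompt_tokens: int, max_output_tokens: int, context_window: int) -> bool:
    """Return True if the prompt plus the reserved output fits in the window."""
    return prompt_tokens + max_output_tokens <= context_window


# A 3,500-token prompt that needs up to 1,000 tokens of output.
for label, size in WINDOWS.items():
    verdict = "fits" if fits_in_context(3_500, 1_000, size) else "too large"
    print(f"{label}: {verdict}")
```

Reserving room for the output matters: a prompt that fills the whole window leaves the model no space to answer.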

In the case of 250,000 4k tokens, the breakdown is as follows:

250,000 is the total number of tokens you can consume.

4,000 tokens is the limit per interaction.

If you use 1,000 tokens per interaction, you can have 250 interactions with the AI.
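
The same arithmetic can be sketched in a few lines of Python. The function name `interactions_available` and its parameters are illustrative assumptions, not part of any product API.

```python
def interactions_available(total_tokens: int, tokens_per_interaction: int,
                           per_interaction_limit: int) -> int:
    """Return how many whole interactions a token allowance covers."""
    if tokens_per_interaction <= 0:
        raise ValueError("tokens_per_interaction must be positive")
    if tokens_per_interaction > per_interaction_limit:
        raise ValueError("a single interaction exceeds the per-interaction limit")
    # Integer division: a partially funded interaction does not count.
    return total_tokens // tokens_per_interaction


# 250,000 total tokens, 1,000 tokens per interaction, 4,000-token limit.
print(interactions_available(250_000, 1_000, 4_000))  # prints 250
```

If every interaction used the full 4,000 tokens instead, the same allowance would cover 62 interactions.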

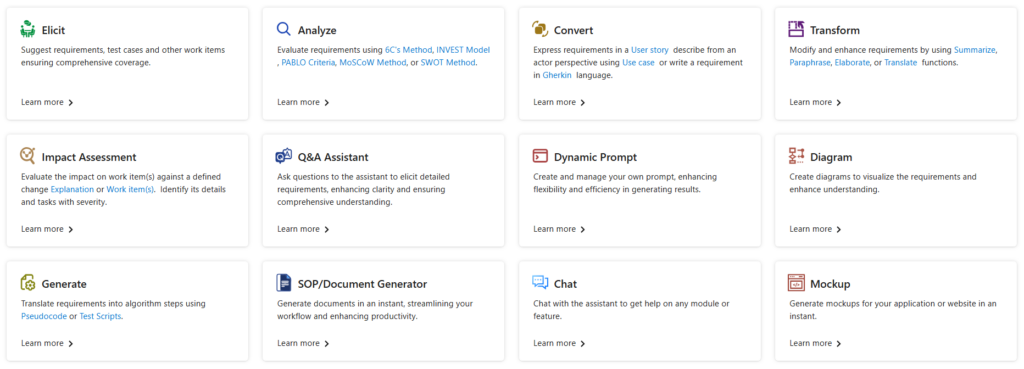

Copilot4DevOps Plus is a work item and requirements management solution designed to revolutionize the DevOps lifecycle. It’s also available as an upgrade to Modern Requirements4DevOps, an award-winning requirements management tool built into Azure DevOps. Using Copilot4DevOps Plus, your teams can save time, offload manual work, handle project complexity, and maintain top-tier security.

It offers you the following:

Copilot4DevOps Plus allows you to choose between the GPT-4o and GPT-4o mini models, with corresponding token usage. The model you choose will determine how you give it inputs and what outputs you will get.

Copilot4DevOps Plus gives you 10 million tokens per month, but higher token counts are available upon request for Enterprise applications.

So, you already have access to your preferred Copilot4DevOps Plus model. How do you optimize your output? Here, optimizing means balancing output quality against token conservation. Here’s how to do it:

By understanding what tokens in AI are, you can choose the right AI model for your organization. AI requirements management tools like Copilot4DevOps Plus are a powerful new technology shaking up work item and requirements management. Companies that don’t follow these trends risk falling behind.

Maximizing token efficiency involves concise prompts, judicious summarization, and strategic output formats. Elevate your AI interactions by grasping token dynamics and leveraging Copilot4DevOps Plus for seamless requirements management.

Ready to transform your DevOps with Copilot4DevOps Plus? Get a free trial today.